Why LLMs Are Not Intelligent

What is an LLM?

A Large Language Model (LLM) such as GPT-4 is a massive statistical engine that predicts the most likely next token (a word or word fragment) based on patterns in its training data. It doesn’t think. It doesn’t understand. It completes patterns.
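To make “it completes patterns” concrete, here is a minimal Python sketch of greedy, word-by-word completion. The probability table `NEXT_WORD_PROBS` and the function `complete` are hypothetical. A real LLM works on tokens and computes each distribution with a neural network instead of looking it up, but the outer loop (pick a likely continuation, append it, repeat) has the same shape.

```python
# Hypothetical toy table: given the last two words, a probability distribution
# over the next word. A real LLM computes such a distribution with a neural
# network over tokens; it does not store a lookup table like this one.
NEXT_WORD_PROBS: dict[tuple[str, ...], dict[str, float]] = {
    ("once", "upon"): {"a": 0.95, "the": 0.05},
    ("upon", "a"): {"time": 0.90, "hill": 0.10},
}


def complete(prompt: str, max_words: int = 10) -> str:
    """Extend the prompt one word at a time, always taking the most probable word."""
    words = prompt.lower().split()
    for _ in range(max_words):
        dist = NEXT_WORD_PROBS.get(tuple(words[-2:]))
        if not dist:  # no known continuation for this context: stop
            break
        # Greedy decoding: append the single most probable next word.
        words.append(max(dist, key=dist.get))
    return " ".join(words)


print(complete("Once upon"))  # once upon a time
```

Nothing in the loop knows what a story is; it only follows the numbers in the table.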

How Transformers Work

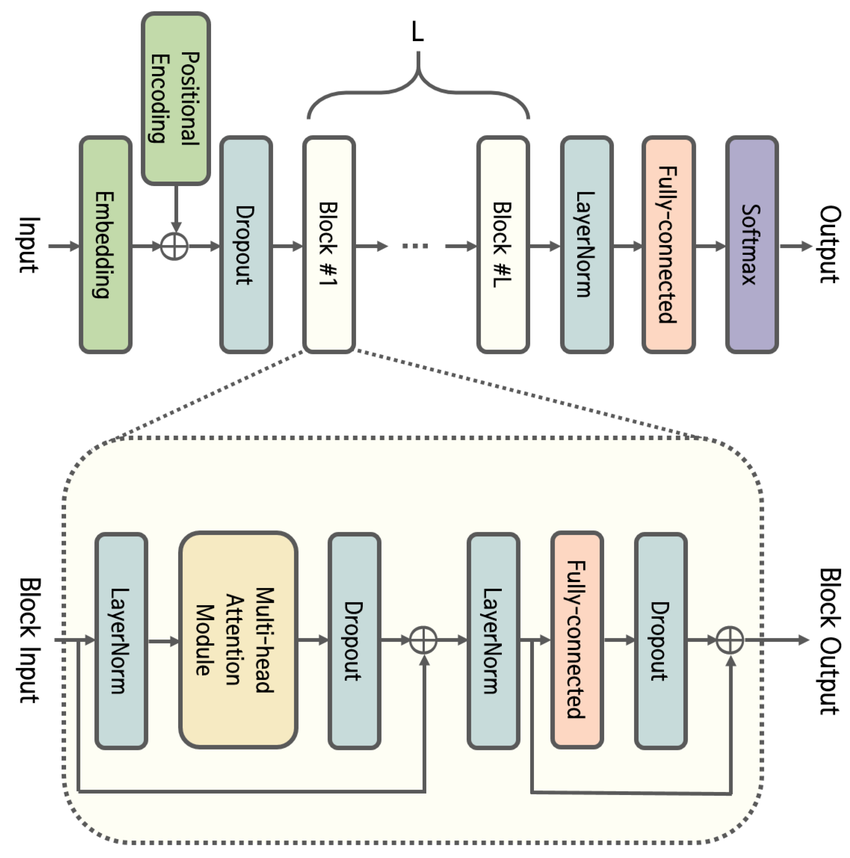

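- Input text is split into tokens, and each token is converted to a vector (an embedding).

- Self-attention layers compute how strongly each token relates to every other token in the context.

- The model assigns a probability to every token in its vocabulary and predicts the next token from these learned statistical weights.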
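The three steps above can be sketched in plain Python. This is a simplified, hypothetical illustration: the 2-dimensional `EMBEDDINGS` are hand-picked, there is a single attention head with no learned query/key/value projections, and the output layer is assumed to reuse the embedding vectors (weight tying). Real transformers stack many such layers with learned weights.

```python
import math

# Hypothetical 2-dimensional embeddings, hand-picked for illustration.
# Real models learn vectors with hundreds or thousands of dimensions.
EMBEDDINGS: dict[str, list[float]] = {
    "the": [1.0, 0.0],
    "cat": [0.0, 1.0],
    "sat": [1.0, 1.0],
    "on": [0.5, 0.0],
}


def softmax(xs: list[float]) -> list[float]:
    """Turn raw scores into probabilities that sum to 1."""
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def dot(a: list[float], b: list[float]) -> float:
    return sum(x * y for x, y in zip(a, b))


def self_attention(vectors: list[list[float]]) -> list[list[float]]:
    """Single-head scaled dot-product self-attention.

    Simplification: queries, keys and values are the input vectors themselves
    (no learned projection matrices, no causal mask, no multiple heads).
    """
    d = len(vectors[0])
    outputs = []
    for q in vectors:
        # Score how strongly this token relates to every token, itself included.
        scores = [dot(q, k) / math.sqrt(d) for k in vectors]
        weights = softmax(scores)
        # Output = attention-weighted average of the value vectors.
        outputs.append([sum(w * v[i] for w, v in zip(weights, vectors)) for i in range(d)])
    return outputs


def predict_next(tokens: list[str]) -> str:
    # Step 1: tokens -> vectors.
    vectors = [EMBEDDINGS[t] for t in tokens]
    # Step 2: self-attention mixes information between positions.
    contextual = self_attention(vectors)
    # Step 3: score every vocabulary word against the last position's vector,
    # convert the scores to probabilities, and return the most likely word.
    last = contextual[-1]
    vocab = list(EMBEDDINGS)
    probs = softmax([dot(last, EMBEDDINGS[w]) for w in vocab])
    return vocab[probs.index(max(probs))]


print(predict_next(["the", "cat"]))  # sat
```

Because only the last position is used for the prediction, omitting the causal mask does not change the result here: the last token may attend to every earlier token either way.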

There is no internal world model, no consciousness, no logic engine.

Example: given the input “The cat sat on the…”, the model outputs “…mat”, the continuation that was statistically most likely in its training data.
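The “mat” example can be reproduced with a simple counting model. The sketch below assumes a tiny, made-up corpus (`CORPUS`) and a hypothetical helper `next_word_distribution` that estimates the probability of each word following a two-word context by relative frequency. This is an n-gram model, a much older and simpler technique than a transformer, used here only to show what “most likely in the training data” means.

```python
from collections import Counter

# Hypothetical miniature "training data", split into words.
CORPUS = """
the cat sat on the mat .
the dog sat on the mat .
the cat slept on the sofa .
a bird sat on the fence .
""".split()


def next_word_distribution(context: tuple[str, str]) -> dict[str, float]:
    """Estimate P(next word | previous two words) by counting the corpus."""
    counts = Counter(
        CORPUS[i + 2]
        for i in range(len(CORPUS) - 2)
        if (CORPUS[i], CORPUS[i + 1]) == context
    )
    total = sum(counts.values())
    # An unseen context yields an empty dict (the loop body never runs).
    return {word: n / total for word, n in counts.items()}


dist = next_word_distribution(("on", "the"))
print(dist)                     # {'mat': 0.5, 'sofa': 0.25, 'fence': 0.25}
print(max(dist, key=dist.get))  # mat
```

“mat” wins only because it appears most often after “on the” in this corpus; change the counts and the “answer” changes with them.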

Why It’s Not Intelligence

- No understanding of meaning.

- No memory between sessions (unless externally engineered).

- No intention or goal beyond completing patterns.
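The point about memory can be illustrated with a hypothetical stand-in for a model call. `toy_model` is a pure function of its input, so any “memory” has to be engineered outside it, for example by resending the whole conversation on every turn, as the hypothetical `ChatSession` class does. This is a sketch of one common pattern, not a description of any specific product.

```python
def toy_model(prompt: str) -> str:
    """Hypothetical stand-in for an LLM call: a pure function of its input.

    It keeps no state between calls; it can only "know" what is in `prompt`.
    """
    if "my name is ada" in prompt.lower():
        return "Your name is Ada."
    return "I don't know your name."


class ChatSession:
    """'Memory' engineered outside the model: resend the whole transcript each turn."""

    def __init__(self) -> None:
        self.history: list[str] = []

    def send(self, message: str) -> str:
        self.history.append(f"User: {message}")
        reply = toy_model("\n".join(self.history))
        self.history.append(f"Assistant: {reply}")
        return reply


# Separate calls share nothing:
toy_model("My name is Ada.")
print(toy_model("What is my name?"))  # I don't know your name.

# The wrapper "remembers" only because it re-sends the earlier messages:
chat = ChatSession()
chat.send("My name is Ada.")
print(chat.send("What is my name?"))  # Your name is Ada.
```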

LLMs are ELIZA (Joseph Weizenbaum’s 1966 pattern-matching chatbot) on steroids: eloquent, scaled, but fundamentally hollow.

Analogy

LLMs are like very fast autocomplete machines with a huge memory – not minds.

Source: ResearchGate, CC BY-NC-ND 4.0

Summary

LLMs are powerful tools, but calling them “intelligent” is misleading. This site exposes how this false label is used to manipulate public perception.